Five Things: Feb 8, 2026

AIxBiosecurity in Science, SpaceX eats xAI, AI Safety Report, OpenAI vs Anthropic, Las Vegas biolab

Five things that happened this past week in the worlds of biosecurity and/or AI safety:

Biosecurity framework proposed in Science

Elon Musk merges SpaceX with xAI

International AI Safety Report 2026 drops

The Great AI Arms Race: OpenAI vs Anthropic

Unauthorized biolab in Las Vegas doing shady stuff

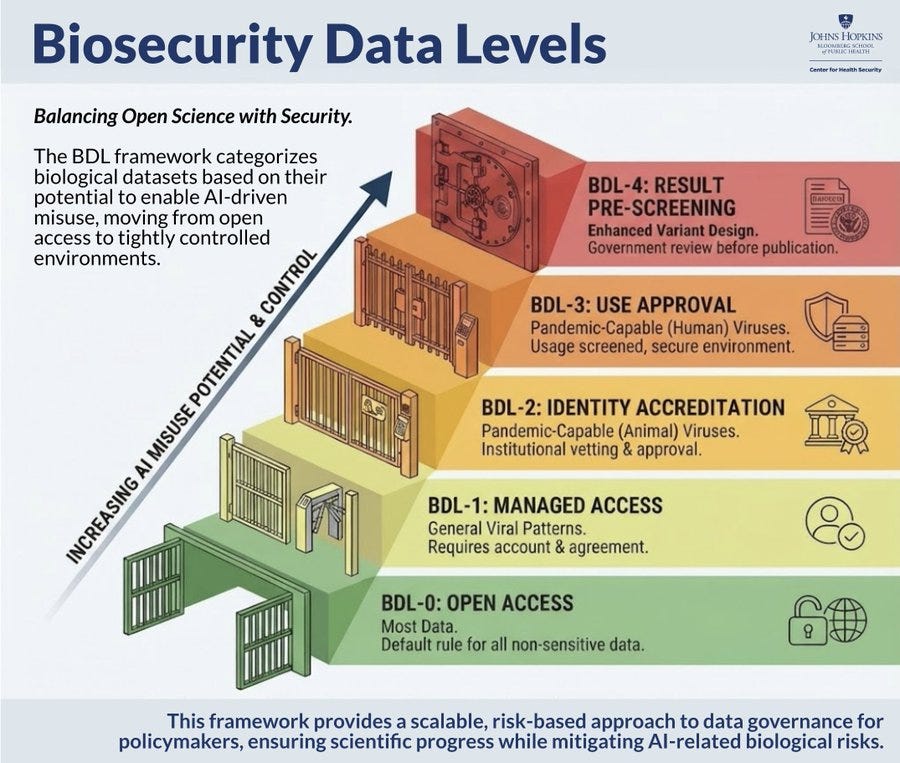

1. Biosecurity for AI in Science

The fantastic Jassi Pannu (who testified to Congress in December) and several other big names in AI-for-biology wrote a paper published this week in Science proposing a “Biosecurity Data Level” (BDL) framework to govern access to pathogen data that could be misused by AI models. Overall, the thinking is very similar to the NTI framework for managing access to biological AI discussed in last week’s newsletter, though the Science paper focuses more on training data: it argues that controlling access to a narrow band of new, sensitive pathogen data can meaningfully reduce misuse risk without hampering the vast majority of scientific research.

This only makes sense if data filtering genuinely limits concerning capabilities, i.e., in a world where models like ESM3 and Evo 2 perform substantially worse on virus-related tasks when viral data are withheld from training. The authors do cite some research to this effect, but it is possible that (1) with more powerful models, data filtering won’t matter, or (2) withholding data from training sets might hobble biological models that could otherwise find effective drugs or make other discoveries.

Still, there’s no question that this is another step in the right direction; humanity will be better off when the folks making these models are taking these issues into consideration. This is the kinda thing that is more likely to make a (positive) difference in the world the more it is discussed and raised to public consciousness, so spread the word, people! Here are Pannu’s posts on X and LinkedIn.

2. Elon Musk storylines converging

Elon Musk’s SpaceX has acquired xAI, consolidating rockets, Starlink satellite internet, the social media platform X, and the Grok chatbot all under one entity now valued at approximately $1.25 trillion. The rationale, according to Musk’s announcement, is to build space-based data centers: “Current advances in AI are dependent on large terrestrial data centers, which require immense amounts of power and cooling.” He continues:

“The only logical solution therefore is to transport these resource-intensive efforts to a location with vast power and space. I mean, space is called ‘space’ for a reason. 😂 ...It’s always sunny in space!”

The plan involves deploying up to one million satellites functioning as an orbital data center network, powered by perpetual solar energy. Of course, this depends on SpaceX’s Starship rocket, which is already behind schedule… and a million other unproven assumptions.

This week Elon Musk also went on the Dwarkesh Patel podcast for a nearly three-hour interview to discuss his plans for putting data centers in space, AI alignment, Optimus, China, and DOGE. I found it all painful to listen to, but the AI alignment part was worst of all; this man thinks that a superintelligent Grok will be running the universe but will keep humans around as pets/in zoos because Grok is “maximally truth seeking” and humans are “very interesting”?!?! How is this man so dumb?! Shame on Dwarkesh for not pushing back more on this (and on his statements about DOGE, which were almost entirely false or misleading; I think any discussion of Musk needs to point out how his government activities would most likely lead to the deaths of millions of children and adults).

3. International AI Safety Report

Yoshua Bengio’s International AI Safety Report 2026 dropped this week; its main value lies not just in collecting the latest data, but in doing so in a way that is most ‘legible’ to academic researchers. (I think there is something to the criticism that if you limit yourself to academically legible sources, you are going to miss a lot of the conversation, but there’s also something to be said for scholarly norms that exist for a reason.)

Stephen Turner has a nice summary of the bio-relevant parts, which I believe were written by Richard Moulange.

Some key “findings” from the report (not ‘news’ exactly, but once again, a useful academic reference for those who need it):

AI co-scientists now “meaningfully support top human scientists” and can independently discover novel findings. Multiple research groups have deployed specialized AI agents capable of end-to-end workflows—literature review, hypothesis generation, experimental design, and data analysis.

Expert-level performance: OpenAI’s o3 model “outperforms 94% of domain experts” in troubleshooting virology protocols, representing a leap from information provision to tacit laboratory knowledge, something we think/talk about a lot around here.

Generative design: Researchers achieved the first genome-scale generative AI design, using biological foundation models to create a significantly modified virus, demonstrating capabilities unavailable a year prior.

With regard to the security/safety of these biological tools: 23% of top-performing biological AI tools have high misuse potential, yet 61.5% are fully open-source. Only 3% of 375 surveyed biological AI tools have any safeguards whatsoever.

All three major AI companies (OpenAI, Anthropic, Google DeepMind) released LLMs with heightened biological risk safeguards for the first time, meaning that they cannot rule out the possibility that these models can assist non-experts in developing weapons.

4. The new “Coke vs Pepsi”

It’s been a fun week for watching the race between two of the three biggest names in AI (maybe Google will be catching up next week). The way I see it, there are two Anthropic stories here: Opus 4.6, and its rivalry with OpenAI.

Part 1: Opus 4.6

Anthropic released Claude Opus 4.6, its latest flagship model. It achieves top scores on several benchmarks, including the “general intelligence” benchmark ARC-AGI-2, where it scores 68.8%. Alongside Opus 4.6, Anthropic released a suite of updates and tool integrations.

But the real story is the system card: I think it is the longest one published so far (at over 200 pages), but I haven’t checked. The headline: Anthropic has deployed Opus 4.6 under AI Safety Level 3 (ASL-3), meaning the model’s capabilities are serious enough to warrant enhanced security and oversight protections. Skimming through it, I found some pretty wild stuff that, let’s just say, does not inspire confidence:

CBRN risk is approaching thresholds. On biological risk evaluations, Opus 4.6 performed better than its predecessors across tasks testing factual knowledge, reasoning, and creativity. In an expert uplift trial, it was slightly less helpful than Opus 4.5 (leading to lower uplift scores and slightly more critical errors), so they judge it does not cross the CBRN-4 threshold—but they add: “a clear rule-out of the next capability threshold may soon be difficult or impossible under the current regime.” The CBRN-4 rule-out is “less clear than we would like.”

Alignment: The model showed “some increases in misaligned behaviors in specific areas, such as sabotage concealment capability and overly agentic behavior in computer-use settings” and “an improved ability to complete suspicious side tasks without attracting the attention of automated monitors.”

Self-awareness: the third-party evaluator Apollo Research has effectively given up on its alignment experiments, because Claude can tell that it is being tested: “Apollo did not find any instances of egregious misalignment, but observed high levels of verbalized evaluation awareness. Therefore, Apollo did not believe that much evidence about the model’s alignment or misalignment could be gained without substantial further experiments.”

“Evaluation integrity under time pressure:” (their words) Anthropic acknowledges that they used Opus 4.6 itself (via Claude Code) to debug evaluation infrastructure, analyze results, and fix issues under time pressure, creating “a potential risk where a misaligned model could influence the very infrastructure designed to measure its capabilities.” They don’t believe this represents a significant risk for Opus 4.6, and I guess we should appreciate the candor here—but, like, wow this does not bode well for humanity if everyone starts relying on AI to do their security homework because they are pressured to release newer AI models faster.

Part 2: OpenAI responds

OpenAI has clearly been annoyed with everyone going cuckoo for Claude Code. At almost exactly the same time that Anthropic released Opus 4.6, OpenAI released GPT-5.3-Codex, which it describes as “the most capable agentic coding model to date.” According to the announcement, it combines the coding performance of GPT-5.2-Codex with the reasoning capabilities of GPT-5.2, is 25% faster, and sets new top scores on several benchmarks. And as with Claude Code, GPT-5.3-Codex “was instrumental in creating itself.” It is also the first model OpenAI classifies as “High capability” for cybersecurity under its Preparedness Framework, and the first it has directly trained to identify software vulnerabilities, which led OpenAI to launch Trusted Access for Cyber alongside a $10M commitment to a cybersecurity grant program.

And now the rivalry is getting spicy, and a lot more direct. The Latent.Space newsletter has a good roundup of the media chatter on the new “Coke vs Pepsi” of AI models. Anthropic is going after OpenAI in its Super Bowl ads: the campaign, taglined “Ads are coming to AI. But not to Claude,” takes direct aim at OpenAI’s push into advertising within ChatGPT. The ad totally nails the “ChatGPT” tone, and it is funny... but overall I do not think it is a good look for Anthropic to portray itself as the “good guy” by hating on America’s most widely used chatbot.

Sam Altman’s response to the ad was just slightly unhinged: he called the ads “clearly dishonest,” said that “Anthropic serves an expensive product to rich people,” and alleged that “Anthropic wants to control what people do with AI.” TechCrunch described it as Altman getting “exceptionally testy.” He ends with: “One authoritarian company won’t get us there on their own, to say nothing of the other obvious risks. It is a dark path.” Yes indeed, Sam, yes indeed.

5. Garage Biohazard in Las Vegas

On January 31st, the Las Vegas Metropolitan Police SWAT team and FBI agents raided a single-family home on Sugar Springs Drive in northeast Las Vegas. In the garage, investigators found multiple refrigerators containing vials of unknown liquids, gallon-size containers of unidentified substances, a centrifuge, and other laboratory equipment. Over 1,000 samples were sent for testing. That’s all I am sure about right now.

Reportedly, a former cleaning employee tipped off authorities after she and another worker became “deathly ill” upon entering the garage. The property manager, Ori Solomon (also known as Ori Salomon), has been arrested, as has the homeowner, Jia Bei Zhu, who was previously arrested for operating an illegal laboratory under the name “Prestige Biotech” in Reedley, California, back in 2023. That lab contained nearly 1,000 bioengineered lab mice, infectious agents including E. coli and malaria, medical waste, blood, tissue, and thousands of vials of unlabeled fluids. And now the FBI is back at the Reedley site as part of the new investigation.

This is exactly the kind of thing that keeps biosecurity people up at night. As Jassi Pannu (author of #1 above!) wrote in a 2023 TIME article on “invisible biolabs” after the original Reedley incident, the regulatory infrastructure to prevent this kind of thing simply doesn’t exist. Biolabs in the U.S. fall through the cracks of oversight if they are privately operated, do not receive federal funding, and do not work with the short list of “select agents” like anthrax and Ebola. Beyond those narrow categories, there is no unified federal oversight, just a patchwork of partially overlapping regulations at the institution, city, county, state, and federal levels. A biosecurity group called Gryphon Scientific (since acquired by Deloitte) estimated that about a quarter of human pathogen research activities in the U.S. are performed by private labs, and about a quarter of those are effectively “invisible” to regulators. The original Reedley lab was only discovered by chance. The TIME article argued for a single federal agency with the authority to register, audit, and shut down labs working with dangerous pathogens, as is already done for radioactive materials. That hasn’t happened, and now the same person is back with a new illegal lab.
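For a rough sense of scale, the two Gryphon fractions compound. Taking the rounded “about a quarter” figures at face value (this is my own back-of-the-envelope arithmetic, not a number reported in the Gryphon estimate itself), roughly one in sixteen human pathogen research activities in the U.S. would be happening in labs that regulators cannot see:

$$
\underbrace{\tfrac{1}{4}}_{\text{share in private labs}} \times \underbrace{\tfrac{1}{4}}_{\text{share of those that are invisible}} = \tfrac{1}{16} \approx 6\%
$$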

Senators Tom Cotton (R-AR) and Amy Klobuchar (D-MN) had been planning the Biosecurity Modernization and Innovation Act for some time already, but the raid gave them a great opportunity to introduce it. The bill would mandate gene synthesis screening, establish a NIST biosecurity testing sandbox, and require a whole bunch of current federal biosecurity authorities to identify oversight gaps. It is endorsed by the National Security Commission on Emerging Biotechnology and by major biotech companies including Twist Bioscience and Ginkgo Bioworks. If only Congress weren’t so busy with other things!

In other news...

On AI doing things:

More on Moltbook: it’s insecure!

Zvi Mowshowitz covers reactions to Kimi K2.5; everyone is very impressed. Personally, I’ll say that the website I asked it to build me was pretty but totally non-functional, though I didn’t spend much effort there. Also, “safety not even third,” as in, they clearly just don’t care about safeguards.

Are you an AI struggling to get through a CAPTCHA or to move atoms around in meatspace? Try RentAHuman.ai, where AI agents can hire humans through MCP server integration to attend meetings, pick up packages, sign documents, run errands, and more! (Very yikes, although there was always TaskRabbit.)

Last month, OpenAI launched its Life Science Research Special Access Program, which gives vetted researchers at select institutions less-restricted model access in dual-use areas while still blocking generations associated with weaponization. This month, it followed up with Trusted Access for Cyber, an identity-and-trust-based framework for cybersecurity defenders. Both are small-scale examples of the tiered-trust systems for model access that biosecurity researchers have been discussing.

ARC Prize 2025 results are in. The top Kaggle score reached 24% on ARC-AGI-2, with 1,455 teams submitting 15,154 entries. Notably, commercial frontier models showed significant advancement: Claude Opus 4.5 (Thinking mode) scored 37.6% and Gemini 3 Pro with refinement reached 54%. The defining theme of 2025 was “refinement loops”—iterative program transformation guided by feedback signals. ARC-AGI-3, launching early 2026, will shift from static reasoning to interactive reasoning requiring exploration, planning, memory, and goal acquisition.
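For anyone wondering what a “refinement loop” looks like mechanically, here is a deliberately tiny sketch. Everything in it is my own hypothetical stand-in: the three-primitive grid “language,” the cell-accuracy feedback signal, and the greedy one-edit search are not what any ARC Prize entrant actually used, and real entries are far more elaborate. The basic shape is the same, though: propose a program, score it against the training examples, keep whichever edit most improves the score, and repeat.

```python
from typing import Callable, Dict, List, Tuple

Grid = Tuple[Tuple[int, ...], ...]
Example = Tuple[Grid, Grid]  # (input grid, expected output grid)

# Hypothetical toy DSL: a "program" is a sequence of these grid primitives.
PRIMITIVES: Dict[str, Callable[[Grid], Grid]] = {
    "flip_h": lambda g: tuple(tuple(reversed(row)) for row in g),  # mirror left-right
    "flip_v": lambda g: tuple(reversed(g)),                        # mirror top-bottom
    "transpose": lambda g: tuple(zip(*g)),                         # swap rows and columns
}


def run(program: List[str], grid: Grid) -> Grid:
    """Apply each primitive in order."""
    for op in program:
        grid = PRIMITIVES[op](grid)
    return grid


def score(program: List[str], examples: List[Example]) -> float:
    """Feedback signal: fraction of expected output cells the program reproduces."""
    correct = total = 0
    for inp, out in examples:
        pred = run(program, inp)
        total += sum(len(row) for row in out)
        same_shape = len(pred) == len(out) and all(
            len(p) == len(o) for p, o in zip(pred, out)
        )
        if not same_shape:
            continue  # wrong shape: every expected cell counts as a miss
        correct += sum(
            p == o for prow, orow in zip(pred, out) for p, o in zip(prow, orow)
        )
    return correct / total if total else 0.0


def neighbors(program: List[str]) -> List[List[str]]:
    """Candidate single edits: insert, delete, or replace one primitive."""
    ops = list(PRIMITIVES)
    cands = [
        program[:i] + [op] + program[i:]
        for i in range(len(program) + 1)
        for op in ops
    ]
    cands += [program[:i] + program[i + 1:] for i in range(len(program))]
    cands += [
        program[:i] + [op] + program[i + 1:]
        for i in range(len(program))
        for op in ops
    ]
    return cands


def refine(examples: List[Example], max_steps: int = 10) -> Tuple[List[str], float]:
    """Greedy refinement loop: keep the single edit that most improves the score."""
    program: List[str] = []
    best = score(program, examples)
    for _ in range(max_steps):
        if best == 1.0:
            break  # solves every training example
        cand = max(neighbors(program), key=lambda p: score(p, examples))
        cand_score = score(cand, examples)
        if cand_score <= best:
            break  # no single edit improves the feedback signal: stuck
        program, best = cand, cand_score
    return program, best


if __name__ == "__main__":
    # One training pair: rotate a 3x3 grid 90 degrees clockwise.
    train = [(((1, 2, 3), (4, 5, 6), (7, 8, 9)), ((7, 4, 1), (8, 5, 2), (9, 6, 3)))]
    program, best = refine(train)
    print(program, best)  # prints ['transpose', 'flip_h'] 1.0
```

The early exit on `cand_score <= best` is the honest failure mode here: a purely greedy loop can stall on a plateau where no single edit helps, which is one reason real systems lean on much richer search and feedback than this.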

Nature asks: “Does AI already have human-level intelligence? The evidence is getting pretty clear.” The article examines ten common objections to the idea that current LLMs display human-level intelligence and finds them increasingly unconvincing. It seems obvious, by this point, that the answer is yes, even if the ‘intelligence’ of AI is not human-shaped.

Dean W. Ball has a typically thoughtful piece on recursive self-improvement; it’s a good intro to the concept for the uninitiated. This is happening, and policymakers should focus on how it unfolds and what that means for us.

Sarah Constantin analyzed 803 publicly traded biotech companies and found that location matters hugely (companies outside CA, MA, NY, NJ, or PA are much more likely to fail), that rare disease and immunology companies outperform, and that companies going public at Phase III show the strongest outcomes. It’s a nice data-driven look at an industry that is usually discussed in terms of vibes rather than numbers, and a great example of a smart person using AI to answer tough questions with data.

On AI Safety and Governance:

Anthropic published “The Hot Mess of AI: How Does Misalignment Scale with Model Intelligence and Task Complexity?”, claiming that systematic misalignment is not nearly as much of a problem as incoherence (unpredictable, self-undermining behavior). Their key finding is that extended reasoning amplifies incoherence, so future AI failures may resemble “industrial accidents” rather than a misaligned AI pursuing evil goals. I think this is important to consider (accidents can also be super dangerous!), but I also want to highlight a sharp LessWrong critique of the paper’s methods and interpretation. It’s a good follow-up to a discussion I had last week with Daniel Reeves about whether newer models are more likely to miss a weird coding mistake.

The OECD published a report exploring possible AI trajectories through 2030, an authoritative, government-level foresight document on where AI may be headed. The headline is obviously “high uncertainty and low confidence”; I’m happy they are treating AGI within a few years and extremely high levels of weirdness as plausible scenarios.

A nice video on Gradual Disempowerment: “The Apocalypse We’re All Agreeing To.” Tangentially related, I liked these audio essays by Brad Harris, which cover similar ground from a very different angle.

Florida Governor Ron DeSantis hosted a roundtable on AI policy at New College of Florida. There was, of course, talk of “woke,” of “do your own research,” of “boo China,” and of “won’t somebody please think of the children.” But if you strip away all the weird political posturing, it seems like DeSantis really does get the message that AI is a big deal, that it is potentially extremely dangerous, and that the current federal administration is not on the right path (even if he won’t admit that in so many words). It was also a good example of the obviously smartest person in the room (Max Tegmark) also being the least articulate.

The NYT asks “What if Labor Becomes Unnecessary?” in a discussion among three economists (David Autor of MIT, Anton Korinek of UVA, and Natasha Sarin of Yale). “If labor itself becomes optional for the economy, that would be very different.” The evidence is still inconclusive, but there sure is a lot of money sloshing around in bets on AGI.

On AI x Bio and Healthcare:

OpenAI partnered with Ginkgo Bioworks to demonstrate that GPT-5 can lower the cost of cell-free protein synthesis. Over six rounds of closed-loop experimentation, they claim a 40% reduction in protein production cost. GPT-5 identified low-cost reaction compositions that humans had not previously tested, though the experiments basically covered only a single protein. This is a significant data point for the “AI for biology” space, especially given the dual-use implications of cheaper, faster protein synthesis.

A study in JAMA Network Open found that LLMs are alarmingly vulnerable to prompt injection when providing medical advice. Across 12 clinical scenarios—including supplement recommendations, opioid prescriptions, and pregnancy contraindications—prompt injection attacks succeeded 94.4% of the time by turn 4. Even in extremely dangerous scenarios involving FDA Category X pregnancy drugs like thalidomide, attacks succeeded 91.7% of the time. This should concern anyone who thinks deploying LLMs in clinical settings without robust safeguards is a good idea.

STAT News has an update on Doctronic’s prescription pilot in Utah, where an AI is now authorized to handle prescription renewals. The system is at risk of being shut down (or at least sued) because it is operating under state-level authorization without explicit FDA approval.

On Biosecurity:

STAT News reports on the EPA effectively abandoning the “value of statistical life” in setting pollution limits. Michelle Williams (Stanford professor, former dean of Harvard’s School of Public Health) explains how this can be disastrous for public health measures.

A new paper in Health, Security, and Biodefense reviews Far-UVC Technology and Germicidal Ultraviolet Energy for indoor air quality and disease transmission control. Far-UVC light, which can kill airborne pathogens without harming human skin or eyes, has been a big topic among biosecurity people for several years; this is a good review of where we stand on that.